As some might have noticed, we now have Linux 5.7 in Debian/unstable, and with it the in-kernel exFAT implementation created by Samsung. Thus we now have two exFAT implementations: the exfat fuse driver and the in-kernel one. Since some comments and mails I received showed minor confusion, especially around the available tooling, it might help to clarify a bit what is required when.

Using the Samsung Linux Kernel Implementation

Probably the most common use case is that you just want to use the in-kernel implementation. Easy: install a Linux 5.7 kernel package for your architecture and either remove the exfat-fuse package or make sure you have version 1.3.0-2 or later installed. Then you can just run mount /dev/sdX /mnt and everything should be fine.

Your result will look something like this:

$ mount | grep sdb
/dev/sdb on /mnt type exfat (rw,relatime,fmask=0022,dmask=0022,iocharset=utf8,errors=remount-ro)
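If you are unsure which driver ended up serving a mount, the type field in the mount output tells you. exfat is the in-kernel driver, while the fuse driver shows up as fuseblk. Below is a minimal Python sketch of that check. It assumes the "DEV on DIR type FSTYPE (OPTIONS)" line format that mount prints. exfat_driver is a hypothetical helper name and is not part of either package.

```python
import re

# Matches the "DEV on DIR type FSTYPE (OPTIONS)" lines printed by mount(8).
MOUNT_LINE = re.compile(
    r"^(?P<dev>\S+) on (?P<dir>.+) type (?P<fstype>\S+) \((?P<opts>.*)\)$"
)


def exfat_driver(line: str) -> str:
    """Return "kernel", "fuse" or "unknown" for one line of mount output."""
    m = MOUNT_LINE.match(line.strip())
    if m is None:
        return "unknown"
    fstype = m.group("fstype")
    if fstype == "exfat":
        return "kernel"  # the in-kernel exFAT driver (Linux 5.7 and later)
    if fstype == "fuseblk":
        # Every FUSE block-device filesystem reports fuseblk, so this only
        # says "some FUSE driver", not specifically exfat-fuse.
        return "fuse"
    return "unknown"
```

Feed it the lines printed by mount. Keep in mind that ntfs-3g and other FUSE block filesystems also report fuseblk, so "fuse" only narrows it down.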

In the past, this basic mount invocation used the mount.exfat helper, which was just a symlink to the helper shipped as /sbin/mount.exfat-fuse. The link was dropped from the package in 1.3.0-2. If you're running a not-so-standard setup and would like to keep an older version of exfat-fuse installed, you must invoke mount -i to prevent mount from calling any helper to mount the filesystem. See also man 8 mount.
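Whether a plain mount gets diverted to a helper depends on one thing: does an executable mount.exfat exist where mount looks for helpers? Below is a minimal Python sketch to check that. The search directories are an assumption (util-linux has traditionally searched /sbin, /sbin/fs.d and /sbin/fs). find_mount_helper is a hypothetical name.

```python
import os
from typing import Iterable, Optional

# Assumption: the directories mount(8) searches for mount.<type> helpers.
# util-linux has traditionally used /sbin, /sbin/fs.d and /sbin/fs.
DEFAULT_SEARCH_DIRS = ("/sbin", "/sbin/fs.d", "/sbin/fs")


def find_mount_helper(fstype: str = "exfat",
                      search_dirs: Iterable[str] = DEFAULT_SEARCH_DIRS) -> Optional[str]:
    """Return the first executable mount.<fstype> helper found, or None."""
    for directory in search_dirs:
        candidate = os.path.join(directory, "mount." + fstype)
        # isfile() follows symlinks, so a dangling link does not count.
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


if __name__ == "__main__":
    helper = find_mount_helper()
    if helper is None:
        print("no mount.exfat helper found: mount will call the kernel directly")
    else:
        # realpath() reveals where a symlink such as the old exfat-fuse one points.
        print(f"{helper} -> {os.path.realpath(helper)}; use mount -i to bypass it")
```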

For those who care, mstone@ and I had a brief discussion about this issue in #963752. It quickly brought me to the conclusion that it's in the best interest of the majority of users to just drop the symlink from the package.

Sticking to the Fuse Driver

If you would like to stick with the fuse driver, you can of course do so. I plan to continue maintaining all packages for the moment. Just keep the exfat-fuse package installed and use the mount.exfat-fuse helper directly, e.g.:

$ sudo mount.exfat-fuse /dev/sdb /mnt
FUSE exfat 1.3.0
$ mount | grep sdb
/dev/sdb on /mnt type fuseblk (rw,nosuid,nodev,relatime,user_id=0,group_id=0,default_permissions,allow_other,blksize=4096)

In case you would like to make this permanent, I would recommend creating a mount.exfat symlink pointing at the mount.exfat-fuse helper.
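Below is a minimal Python sketch of creating that symlink. It assumes the helper lives in /sbin, as shipped by the package. link_fuse_helper is a hypothetical name. It deliberately refuses to overwrite an existing mount.exfat, and it needs root when pointed at the real /sbin.

```python
import os


def link_fuse_helper(sbin_dir: str = "/sbin") -> str:
    """Create sbin_dir/mount.exfat -> mount.exfat-fuse and return the link path."""
    target = os.path.join(sbin_dir, "mount.exfat-fuse")
    link = os.path.join(sbin_dir, "mount.exfat")
    if not os.access(target, os.X_OK):
        raise FileNotFoundError(f"{target} missing; is exfat-fuse installed?")
    # lexists() also catches a dangling symlink left over from earlier.
    if os.path.lexists(link):
        raise FileExistsError(f"{link} already exists")
    # A relative target stays valid however the directory is reached.
    os.symlink("mount.exfat-fuse", link)
    return link
```

The shell equivalent is a single sudo ln -s mount.exfat-fuse /sbin/mount.exfat.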

mkfs and fsck - exfat-utils vs exfatprogs

Besides the filesystem access itself, we now also have two implementations of the tooling, which supports filesystem creation, fscking and label adjustment. The older one is exfat-utils by the creator of the fuse driver. It has also been part of Debian since the fuse driver was packaged in 2012. New in Debian is the exfatprogs package, written by the Samsung engineers. And here the situation starts to get a bit more complicated.

Both packages ship a mkfs.exfat and a fsck.exfat tool, so we cannot co-install them. As a result, both packages currently declare a conflict with each other. As outlined in this thread, I do not plan to overcomplicate the situation by using the alternatives system. I feel strongly that this would just create more confusion without a real benefit. Since the tools do not have matching CLI options, it could also cause additional issues and confusion.

I plan to keep it that way, at least for the bullseye release. Afterwards, depending on how usage evolves, it's possible to drop mkfs.exfat and fsck.exfat from exfat-utils; they are in fact, again, only symlinks. A pain point might be tools interfacing with the differing implementations. Currently I see only three reverse dependencies, so consolidating them should be manageable if required.
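Since the exfat-utils mkfs.exfat is only a symlink, you can guess which implementation is installed by checking whether mkfs.exfat is a symlink. Below is a rough Python sketch under exactly that assumption: a regular file is taken to mean exfatprogs. mkfs_exfat_kind is a hypothetical name. It also looks in /sbin and /usr/sbin, because those are usually not in a regular user's PATH on Debian.

```python
import os
import shutil
from typing import Optional


def mkfs_exfat_kind(path: Optional[str] = None) -> str:
    """Guess which package provides mkfs.exfat (heuristic, see lead-in)."""
    if path is None:
        path = (shutil.which("mkfs.exfat")
                or shutil.which("mkfs.exfat", path="/sbin:/usr/sbin"))
    if path is None or not os.path.lexists(path):
        return "none"
    # Assumption: only exfat-utils ships mkfs.exfat as a symlink.
    if os.path.islink(path):
        return f"exfat-utils (symlink to {os.readlink(path)})"
    return "exfatprogs"
```

This is only a heuristic. Running dpkg -S on the file's path names the owning package authoritatively.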

Last but not least, it might be relevant to mention that the exfat-utils package also contains a dumpexfat tool. It could be helpful if you're into forensics or other lower-level analysis of an exFAT filesystem. Thus there is a bit of interest in having those tools co-installed in some (I would say niche) cases.

buster-backports

Well, if you use buster with a backports kernel, you're a bit on your own. In case you want to keep the fuse driver installed but would still like to mount with the kernel exFAT driver, e.g. for testing, you must use mount -i.

I do not plan any uploads to buster-backports. If you need a mkfs.exfat on buster, I would recommend just using the one from exfat-utils for now. It has been good enough for the past years and should not go sour before the bullseye release, which ships exfatprogs for you.

Kudos

My sincere kudos go to:

- Andrew Nayenko, who wrote the exFAT fuse implementation, which has been very helpful to many people over the past years. He's a great upstream to work with.

- Namjae Jeon and Hyunchul Lee, who maintain the Linux exFAT driver and exfatprogs. They are also very responsive upstreams and easy to work with.

- Last but not least, our ftp-master, who reviewed the exfatprogs package way faster than I had anticipated, given the current NEW backlog.

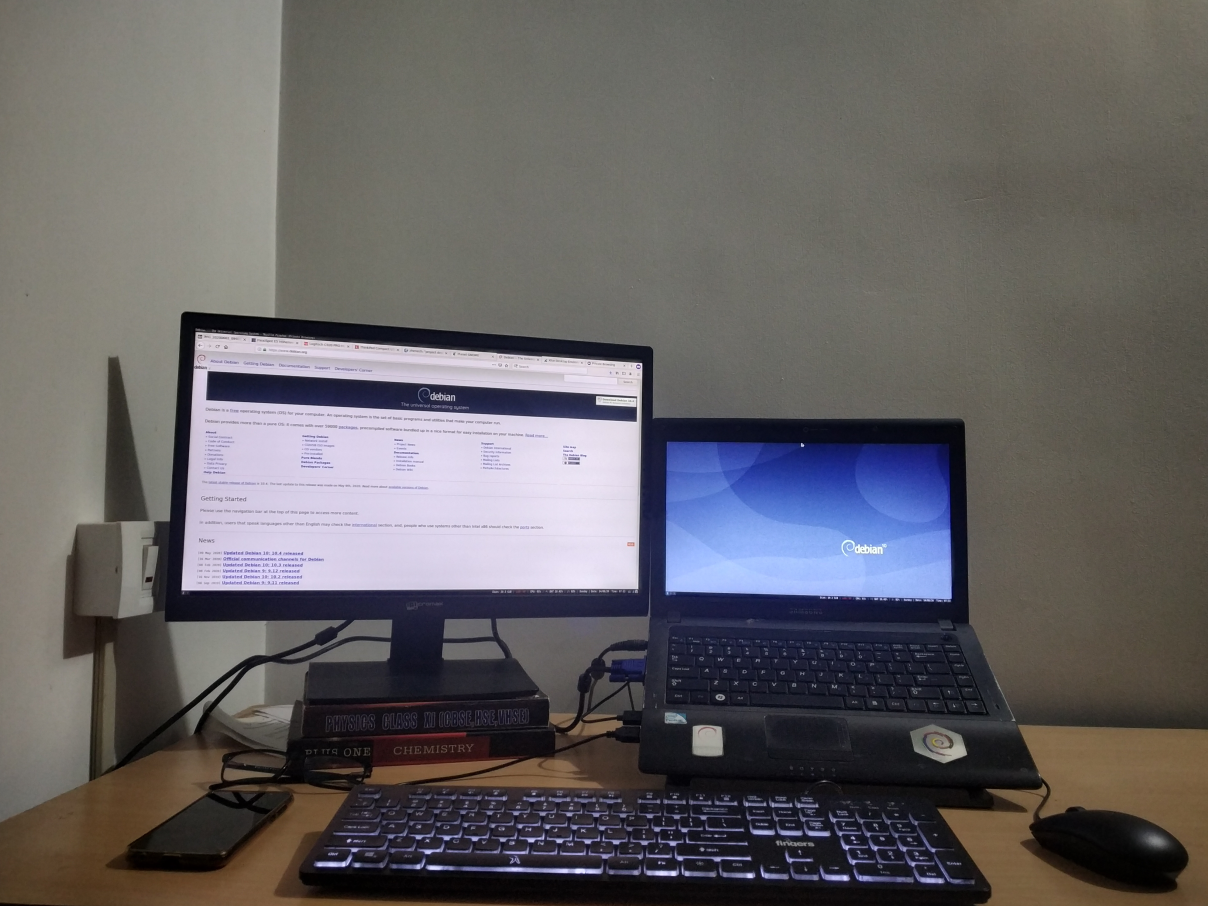

Hello,

Recently I've seen a lot of people sharing their home office setups, and I thought: why don't I do something similar? It's not to beat FOMO, but when I revisit this blog in the future, it will be lovely to see that I had some cool stuff.

There are people who went deep down into the ocean to lay cables so that I can have a remote job, and I am thankful to them.

Being remote, my home is my office. On my work table I have a Samsung R439 laptop; I've blogged about it earlier. The new addition is another 4GB of RAM, for a total of 6GB, and a 120GB SSD. I run Debian testing on it. The laptop sits on a stand, with a Dell MS116 external mouse always connected to it. I also use an external keyboard from Fingers. The keys are very stiff, so I don't recommend it to anyone. The only reason I chose this keyboard is that it was within my budget and has a backlight, which I needed most.

I have a Micromax MM215FH76 21-inch monitor as my secondary display. It is stacked on a couple of old books to match the height of the laptop stand. Everything is OK with this monitor, except that it doesn't have an HDMI port and the stand is very weak. I use i3wm, and this small script helps me manage my monitor arrangement.

Now I'm not sure if someone encountered the same issue, or if people just registered random domains because they could. I found registrations for

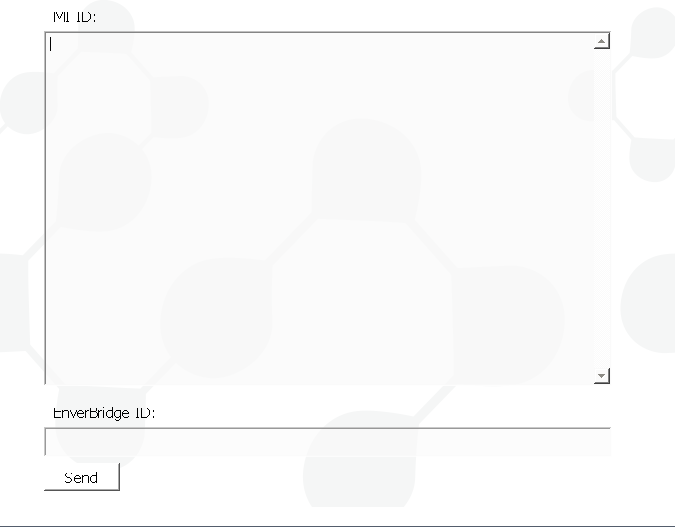

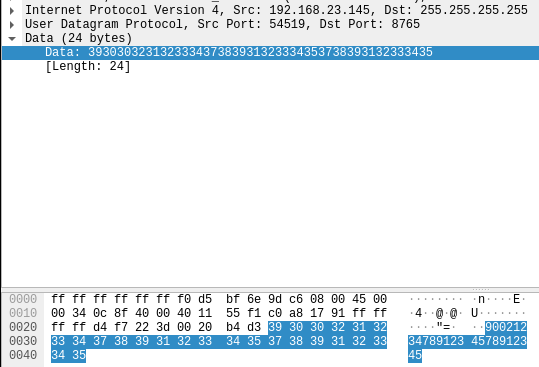

The Network Communication

To my own surprise, the communication is straightforward. A single UDP packet is sent to the broadcast address (255.255.255.255) on port 8765.
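Below is a minimal Python sketch of sending such a datagram, e.g. to replay or test a capture. The payload bytes are left to the caller, since their exact layout is not spelled out here. send_payload is a hypothetical name. The one non-obvious detail is SO_BROADCAST: Linux refuses to send to 255.255.255.255 on a socket without it.

```python
import socket

# From the text: one UDP datagram to the limited broadcast address, port 8765.
BROADCAST_ADDR = "255.255.255.255"
PORT = 8765


def send_payload(payload: bytes, host: str = BROADCAST_ADDR, port: int = PORT) -> int:
    """Send payload as a single UDP datagram and return the number of bytes sent."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Without SO_BROADCAST, sending to a broadcast address fails (EACCES on Linux).
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        return sock.sendto(payload, (host, port))
```

Passing host="127.0.0.1" lets you exercise it against a local listener without touching the LAN.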

I expected some strange binary protocol, but the payload is just simple numbers: a combination of the inverter's serial numbers and the ID of the Enverbridge device. One thing I'm not 100% sure about is the inverter serial numbers. There are two of them, but on the inverters I've seen, both serial numbers are always the same. So the payload is assembled like this:
